ExtraHop Discover Appliance GCP Deployment With Terraform

published: 27th of March 2022

Intro

I was recently working with a customer to deploy an ExtraHop Cloud Sensor (EDA) in Google Cloud Platform (GCP) with Terraform. Deploying in the cloud is not as straightforward as deploying in other virtualized environments such as VMware. Thankfully, Terraform lets us complete this process in code, so we don't have to die a slow death by a thousand clicks.

In this post, I will show you how to deploy an EDA in GCP with Terraform.

Software

The following software was used in this post.

- Terraform - 1.1.7

- gcloud - 378.0.0

- ExtraHop Discover Appliance - 8.8.0

Pre-Flight Check

Terraform and gcloud

This post assumes that you already have Terraform and the gcloud CLI tools installed and set up. For instructions on how to set these tools up, check out this post.

EDA Deployment File

You will need the EDA deployment file for GCP, which can be downloaded from the ExtraHop customer portal. Save the deployment file in the same directory as your main.tf file; the Terraform code below expects it to be named extrahop-eda-gcp-<version>.tar.gz.

EDA License

A license is not strictly required to deploy the instance, but one is required to enable the capture interface on the EDA.

Lab Topology

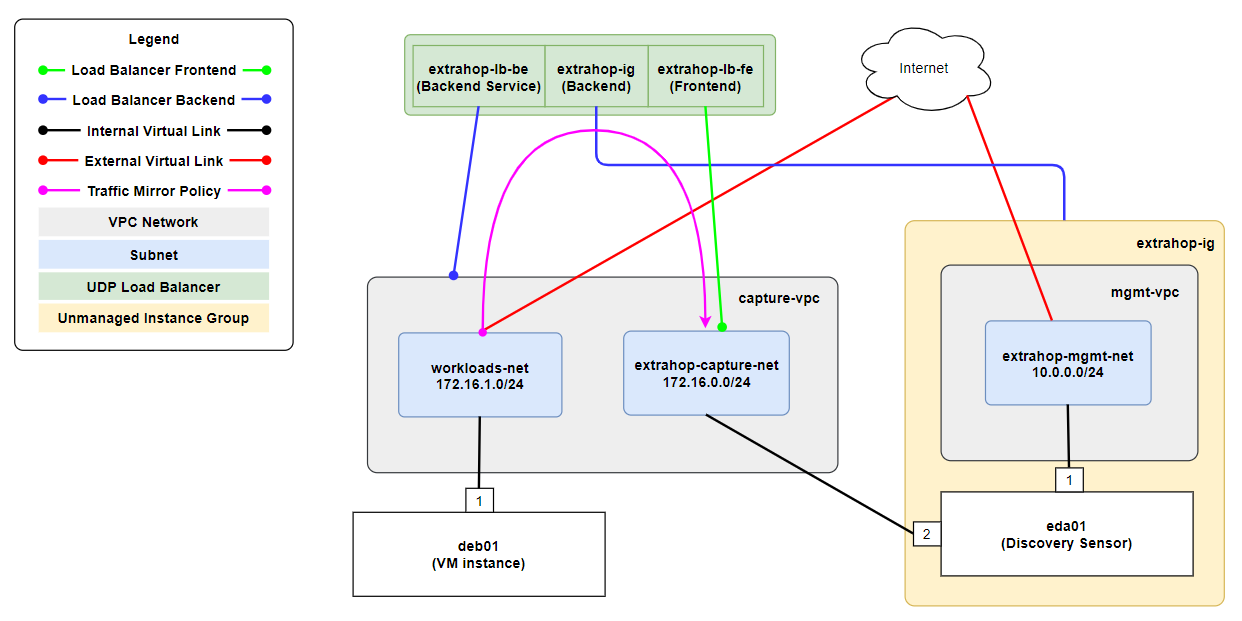

In this post, we will be building the following infrastructure.

The following points summarize this above topology.

- There are two Virtual Private Clouds (VPCs):
  - mgmt-vpc for the management interface of the EDA.
  - capture-vpc for the workloads that have their traffic mirrored to the EDA, and for the EDA capture interface.
- The mgmt-vpc has a single subnet, extrahop-mgmt-net (10.0.0.0/24).
- The capture-vpc has two subnets:
  - extrahop-capture-net (172.16.0.0/24) for the capture target.
  - workloads-net (172.16.1.0/24) for workload VMs.
- eda01 is the ExtraHop sensor that captures packets.
- deb01 is a workload VM that has its traffic mirrored to eda01.
- The instance group extrahop-ig groups the eda01 VM with the mgmt-vpc VPC (extrahop-mgmt-net subnet) so that it can be used as a load balancer backend.
- GCP packet mirroring sends mirrored traffic to an internal load balancer, so a load balancer is required. It has the following components:
  - A backend service, extrahop-lb-be, attached to the capture-vpc VPC.
  - A backend attached to the extrahop-ig instance group.
  - A frontend, extrahop-lb-fe, attached to the extrahop-capture-net subnet.
- A traffic mirror policy (denoted by the pink line) mirrors traffic from the deb01 VM to the load balancer frontend.
- eda01 and deb01 have external IP addresses assigned (denoted by the red line), allowing them to access the internet.
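In the Terraform code that follows, the traffic mirror policy (the extrahop-pm resource) uses a filter block with direction set to BOTH and empty cidr_ranges and ip_protocols lists. When neither ranges nor protocols are specified, GCP mirrors all traffic from the mirrored resources. If you only need a subset, the filter can be narrowed. The block below is an illustrative variation, not part of this lab; restricting it to TCP is an assumption chosen for the example, and it would replace the filter block inside extrahop-pm.

```hcl
// Illustrative variation of the filter block in the extrahop-pm resource.
// Assumption: only TCP traffic is of interest.
filter {
  cidr_ranges  = []        // empty list: no IP range restriction
  direction    = "BOTH"    // mirror both ingress and egress traffic
  ip_protocols = ["tcp"]   // mirror TCP only
}
```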

Terraform Code

For simplicity, I have placed all of the code for this post in the main.tf file. The code is split into sections marked by comments (//).

If you are following along, you will need to update the Variables section to suit your environment.
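Rather than editing the defaults in main.tf, you can also override them in a terraform.tfvars file in the same directory, which Terraform loads automatically. The sketch below simply repeats the placeholders from the Variables section; replace them with your own values. Keep in mind that storage bucket names are globally unique, so the bucket name needs to be one nobody else has taken.

```hcl
// terraform.tfvars: overrides the variable defaults declared in main.tf.
// Every value below is a placeholder for your own environment.
gcp_project         = "<project-name>-<project-id>"
gcp_region          = "australia-southeast1"
gcp_zone            = "australia-southeast1-a"
storage_bucket_name = "extrahop-<random-id>"
eda_version         = "8.8.0.1450"
my_public_ip        = "<your-public-ip>/32"
```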

// Variables

variable "gcp_project" {

type = string

default = "<project-name>-<project-id>"

}

variable "gcp_region" {

type = string

default = "australia-southeast1"

}

variable "gcp_zone" {

type = string

default = "australia-southeast1-a"

}

variable "storage_bucket_name" {

type = string

default = "extrahop-<random-id>"

}

variable "eda_version" {

type = string

default = "8.8.0.1450"

}

variable "my_public_ip" {

type = string

default = "<your-public-ip>/32"

}

// Provider

provider "google" {

project = var.gcp_project

region = var.gcp_region

zone = var.gcp_zone

}

// Storage Bucket

resource "google_storage_bucket" "extrahop_bucket" {

name = var.storage_bucket_name

location = var.gcp_region

force_destroy = true

}

resource "google_storage_bucket_object" "eda_image" {

name = "extrahop-eda-gcp-${var.eda_version}.tar.gz"

source = "extrahop-eda-gcp-${var.eda_version}.tar.gz"

bucket = google_storage_bucket.extrahop_bucket.name // reference the bucket resource so it is created before the upload

}
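The eda01 resource further down boots from google_compute_disk.eda01_datastore and attaches google_compute_disk.eda01_pcap, but neither disk, nor an image built from the uploaded deployment file, is defined in this listing (the apply output reports 20 resources, while the listing defines 17). A minimal sketch of the three missing resources follows. The image name, the use of raw_disk, and the pcap disk size are assumptions, so check the ExtraHop GCP deployment guide for the values it recommends.

```hcl
// Assumed: GCE image built from the deployment file uploaded to the bucket.
// Image names may only contain lowercase letters, digits and hyphens,
// so the dots in the version string are replaced with hyphens.
resource "google_compute_image" "eda_image" {
  name = "extrahop-eda-gcp-${replace(var.eda_version, ".", "-")}"

  raw_disk {
    source = "https://storage.googleapis.com/${google_storage_bucket.extrahop_bucket.name}/${google_storage_bucket_object.eda_image.name}"
  }
}

// Assumed: boot disk for eda01, created from the image above.
resource "google_compute_disk" "eda01_datastore" {
  name  = "eda01-datastore"
  zone  = var.gcp_zone
  image = google_compute_image.eda_image.self_link
}

// Assumed: blank secondary disk for packet capture; 100 GB is a placeholder size.
resource "google_compute_disk" "eda01_pcap" {
  name = "eda01-pcap"
  zone = var.gcp_zone
  size = 100
}
```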

// VPCs

resource "google_compute_network" "mgmt_vpc" {

name = "mgmt-vpc"

auto_create_subnetworks = false

}

resource "google_compute_subnetwork" "extrahop_mgmt_net" {

name = "extrahop-mgmt-net"

ip_cidr_range = "10.0.0.0/24"

region = var.gcp_region

network = google_compute_network.mgmt_vpc.id

}

resource "google_compute_network" "capture_vpc" {

name = "capture-vpc"

auto_create_subnetworks = false

}

resource "google_compute_subnetwork" "extrahop_capture_net" {

name = "extrahop-capture-net"

ip_cidr_range = "172.16.0.0/24"

region = var.gcp_region

network = google_compute_network.capture_vpc.id

}

resource "google_compute_subnetwork" "workloads_net" {

name = "workloads-net"

ip_cidr_range = "172.16.1.0/24"

region = var.gcp_region

network = google_compute_network.capture_vpc.id

}

// Firewall Rules

resource "google_compute_firewall" "allow_me_to_eda" {

name = "allow-me-to-eda"

network = google_compute_network.mgmt_vpc.name

direction = "INGRESS"

allow {

protocol = "all"

}

source_ranges = [var.my_public_ip]

}

resource "google_compute_firewall" "allow_me_to_deb" {

name = "allow-me-to-deb"

network = google_compute_network.capture_vpc.name

direction = "INGRESS"

allow {

protocol = "all"

}

source_ranges = [var.my_public_ip]

}

resource "google_compute_firewall" "allow_workload_to_capture_in" {

name = "allow-workload-to-capture-in"

network = google_compute_network.capture_vpc.name

direction = "INGRESS"

allow {

protocol = "all"

}

source_ranges = ["172.16.1.0/24"]

}

// eda01

resource "google_compute_instance" "eda01" {

name = "eda01"

machine_type = "e2-medium"

zone = var.gcp_zone

boot_disk {

source = google_compute_disk.eda01_datastore.self_link

}

attached_disk {

source = google_compute_disk.eda01_pcap.self_link

}

network_interface {

network = google_compute_network.mgmt_vpc.name

subnetwork = google_compute_subnetwork.extrahop_mgmt_net.name

access_config {

network_tier = "STANDARD"

}

}

network_interface {

network = google_compute_network.capture_vpc.name

subnetwork = google_compute_subnetwork.extrahop_capture_net.name

}

}

resource "google_compute_instance_group" "extrahop_ig" {

name = "extrahop-ig"

zone = var.gcp_zone

network = google_compute_network.mgmt_vpc.id

instances = [

google_compute_instance.eda01.self_link,

]

}

// deb01

resource "google_compute_instance" "deb01" {

name = "deb01"

machine_type = "e2-medium"

zone = var.gcp_zone

boot_disk {

initialize_params {

image = "debian-cloud/debian-9"

}

}

network_interface {

network = google_compute_network.capture_vpc.name

subnetwork = google_compute_subnetwork.workloads_net.name

access_config {

network_tier = "STANDARD"

}

}

}

// Load balancer

resource "google_compute_health_check" "extrahop_hc" {

name = "extrahop-hc"

check_interval_sec = 15

timeout_sec = 5

tcp_health_check {

port = "443"

}

}

resource "google_compute_region_backend_service" "extrahop_lb_be" {

name = "extrahop-lb-be"

region = var.gcp_region

protocol = "UDP"

network = google_compute_network.capture_vpc.self_link

health_checks = [google_compute_health_check.extrahop_hc.id]

backend {

group = google_compute_instance_group.extrahop_ig.self_link

}

}

resource "google_compute_forwarding_rule" "extrahop_lb_fe" {

name = "extrahop-lb-fe"

region = var.gcp_region

load_balancing_scheme = "INTERNAL"

backend_service = google_compute_region_backend_service.extrahop_lb_be.id

ip_protocol = "UDP"

all_ports = true

network = google_compute_network.capture_vpc.name

subnetwork = google_compute_subnetwork.extrahop_capture_net.name

is_mirroring_collector = true

}

// Traffic Mirror

resource "google_compute_packet_mirroring" "extrahop_pm" {

name = "extrahop-pm"

region = var.gcp_region

network {

url = google_compute_network.capture_vpc.self_link

}

collector_ilb {

url = google_compute_forwarding_rule.extrahop_lb_fe.self_link

}

mirrored_resources {

subnetworks {

url = google_compute_subnetwork.workloads_net.self_link

}

}

filter {

cidr_ranges = []

direction = "BOTH"

ip_protocols = []

}

}

Build

Now, building the environment is as simple as running the terraform apply command.

terraform apply -auto-approve

# Output

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

.

. output omitted

.

Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

Post Deployment

Once the deployment is complete, there are a couple of manual tasks required to enable the EDA to capture traffic.
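The steps below need eda01's external management address (to reach its web UI) and deb01's internal address (to search for it during verification). Instead of looking these up in the console, output blocks like the following sketch could be added to main.tf. The output names are hypothetical, and the indexes assume the interface order used in this post, where eda01's first network interface is the management NIC with the external IP. Adding outputs and running terraform apply again prints the values without changing any infrastructure.

```hcl
// Hypothetical outputs: printed after terraform apply, or on demand with terraform output.
output "eda01_mgmt_public_ip" {
  // network_interface[0] is eda01's mgmt-vpc NIC, which carries the access_config (external IP).
  value = google_compute_instance.eda01.network_interface[0].access_config[0].nat_ip
}

output "deb01_internal_ip" {
  // Internal address assigned to deb01 in the workloads-net subnet.
  value = google_compute_instance.deb01.network_interface[0].network_ip
}
```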

Apply License

For an EDA to enable its capture interface a license must be applied. A license can be purchased from your ExtraHop account team.

Capture Settings

The default discovery mode for an EDA is Layer 2 discovery, which discovers devices in its broadcast domain. There is no broadcast domain in a VPC. Therefore, we need to enable Layer 3 discovery and define the subnets that contain the devices we want to track as assets.

To change the device discovery mode and set the remote networks (subnets), navigate to:

To enable L3 discovery, under the Local Device Discovery section, tick the Enable local device discovery checkbox.

To configure remote networks, under the Remote Device Discovery section, enter the desired prefixes. We will add 172.16.1.0/24, the prefix of our workloads-net subnet.

Once Layer 3 discovery is enabled and the configuration is saved, restart the capture service. To restart the capture, navigate to:

Now click the Restart action for the Capture Status item.

Verification

Let's confirm that eda01 can see deb01 in its data feed.

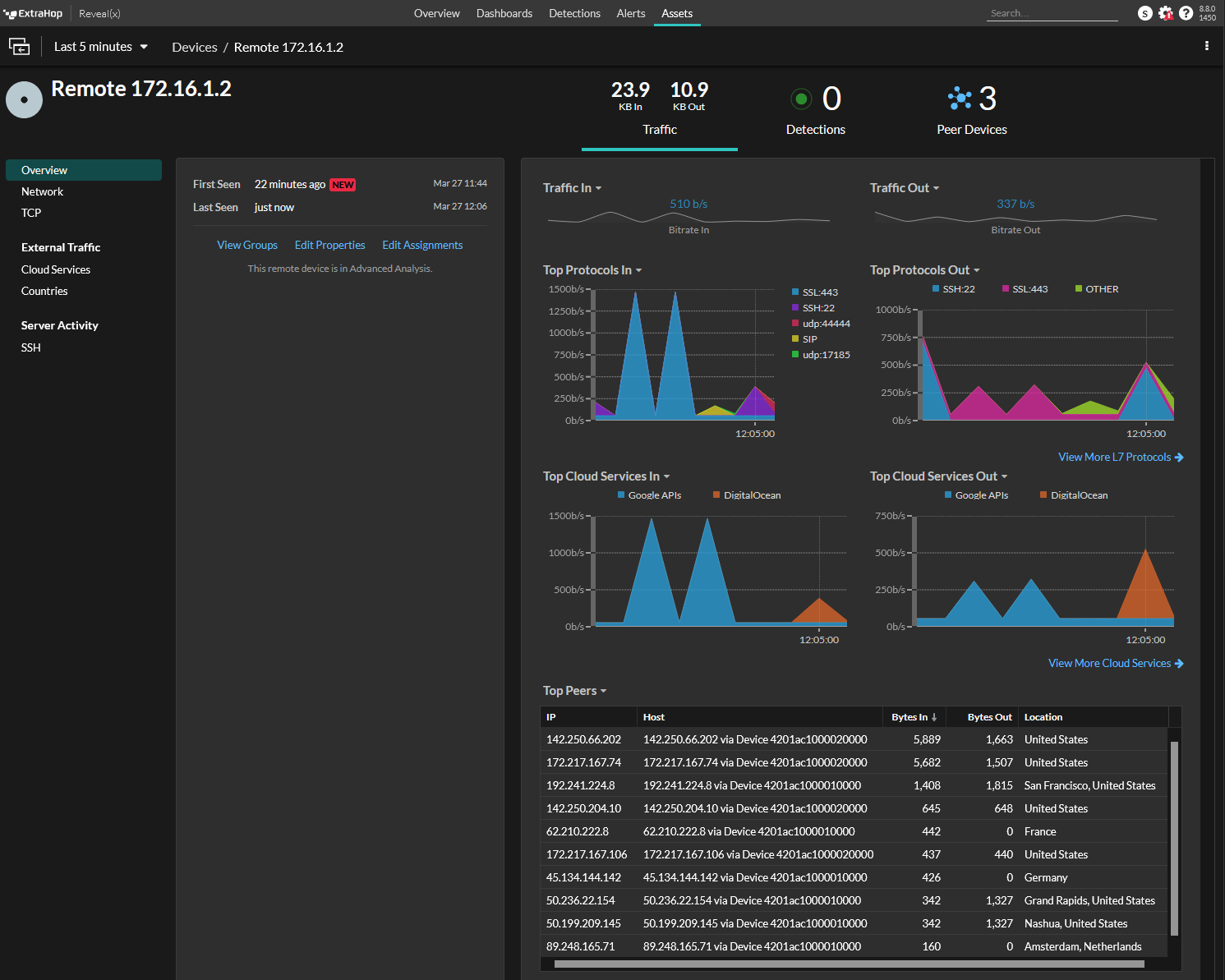

deb01 received the IP address 172.16.1.2 via DHCP. In the search bar at the top right of the UI, search for the IP 172.16.1.2. Clicking the result takes you to the asset page.

We can see that 172.16.1.2 is discovered as a remote device and is having its traffic analyzed.

Clean Up

Don't forget to run terraform destroy to clean up all the infrastructure and save yourself some $DOLLARS.

Cost

I worked on this post on and off for two days, destroying the infrastructure whenever I wasn't working on it to keep the costs down. All up, it cost $1.61 AUD to build and test this lab while also writing this blog post.

Outro

In this post, I showed you how to deploy an ExtraHop Discover Appliance on Google Cloud Platform using Terraform. This was a pretty fun one for me; thank you for joining me on this journey.

If this post was of interest, look out for a future post where I will add Google BigQuery as a record store to save detailed transaction data that can be queried from the EDA.

Links

https://docs.extrahop.com/current/deploy-eda-gcp/


https://cloud.google.com/vpc/docs/using-packet-mirroring

https://cloud.google.com/vpc/docs/using-firewalls